MySqlOperator, SqliteOperator, PostgresOperator, MsSqlOperator, OracleOperator, JdbcOperator, etc.SimpleHttpOperator – sends an HTTP request.PythonOperator – calls an arbitrary Python function.There are different types of operators available( As given on Airflow Website):

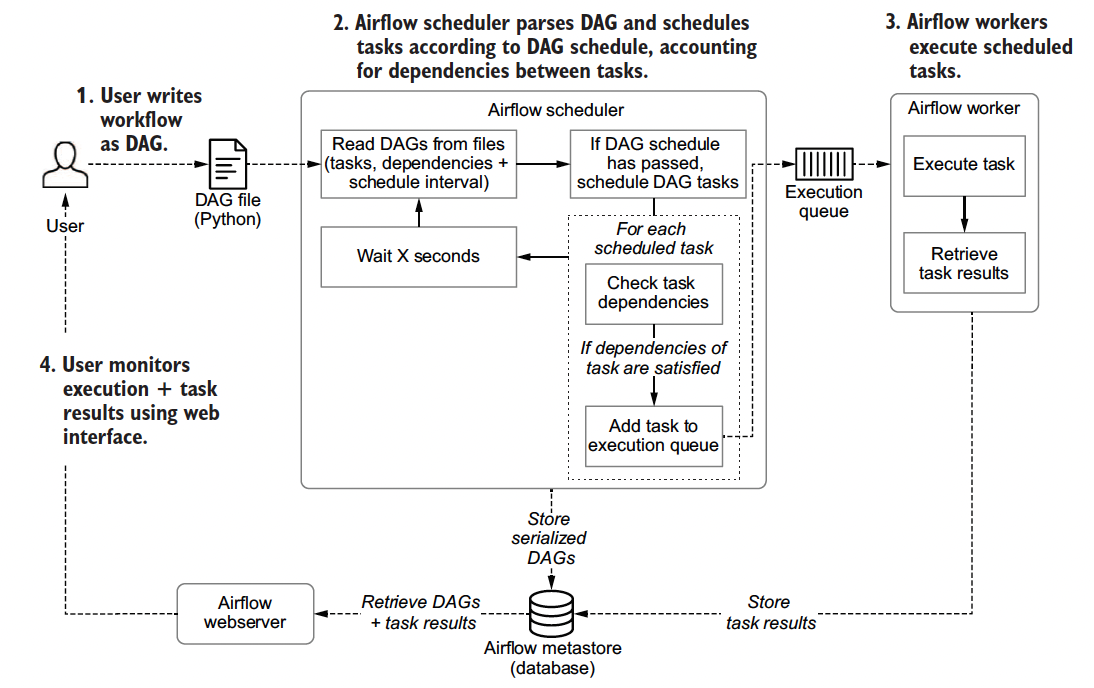

An operator defines an individual task that needs to be performed. OK, it’s lame or weird but could not find a better example to explain a directed cycle. Let me try to explain in simple words: You can only be a son of your father but not vice versa. Equivalently, a DAG is a directed graph that has a topological ordering, a sequence of the vertices such that every edge is directed from earlier to later in the sequence. That is, it consists of finitely many vertices and edges, with each edge directed from one vertex to another, such that there is no way to start at any vertex v and follow a consistently-directed sequence of edges that eventually loops back to v again. In mathematics and computer science, a directed acyclic graph (DAG /ˈdæɡ/ (About this sound listen)), is a finite directed graph with no directed cycles. For instance, the first stage of your workflow has to execute a C++ based program to perform image analysis and then a Python-based program to transfer that information to S3. Airflow is Python-based but you can execute a program irrespective of the language. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.īasically, it helps to automate scripts in order to perform tasks. Rich command line utilities make performing complex surgeries on DAGs a snap. The airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Use airflow to author workflows as directed acyclic graphs (DAGs) of tasks. What is Airflow?Īirflow is a platform to programmatically author, schedule and monitor workflows.
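The topological-ordering definition of a DAG can be made concrete with Python's standard-library `graphlib` module (Python 3.9+). This is not Airflow code: it is a minimal sketch, with hypothetical task names borrowed from the C++/S3 example, that computes a valid execution order for a dependency graph and detects when a graph contains a directed cycle (the father/son relationship reversed).

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical two-stage workflow (names are illustrative, not Airflow task IDs):
# the C++ image-analysis step must finish before the Python upload-to-S3 step.
# Each key maps a task to the set of tasks it depends on.
workflow = {
    "analyze_images_cpp": set(),
    "upload_results_to_s3": {"analyze_images_cpp"},
}


def execution_order(graph):
    """Return one valid topological ordering; raises CycleError if the graph has a directed cycle."""
    return list(TopologicalSorter(graph).static_order())


def has_directed_cycle(graph):
    """True if no topological ordering exists, i.e. the graph is not a DAG."""
    try:
        execution_order(graph)
    except CycleError:
        return True
    return False


print(execution_order(workflow))  # ['analyze_images_cpp', 'upload_results_to_s3']

# "son" depends on "father" is fine on its own, but adding the reverse edge creates a cycle.
family = {"son": {"father"}, "father": {"son"}}
print(has_directed_cycle(family))  # True
```

In an actual Airflow DAG file, the same dependency would be declared between two operator instances with the bitshift syntax, e.g. `analyze >> upload`, and the scheduler takes care of running them in that order.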

Airflow scheduler not starting

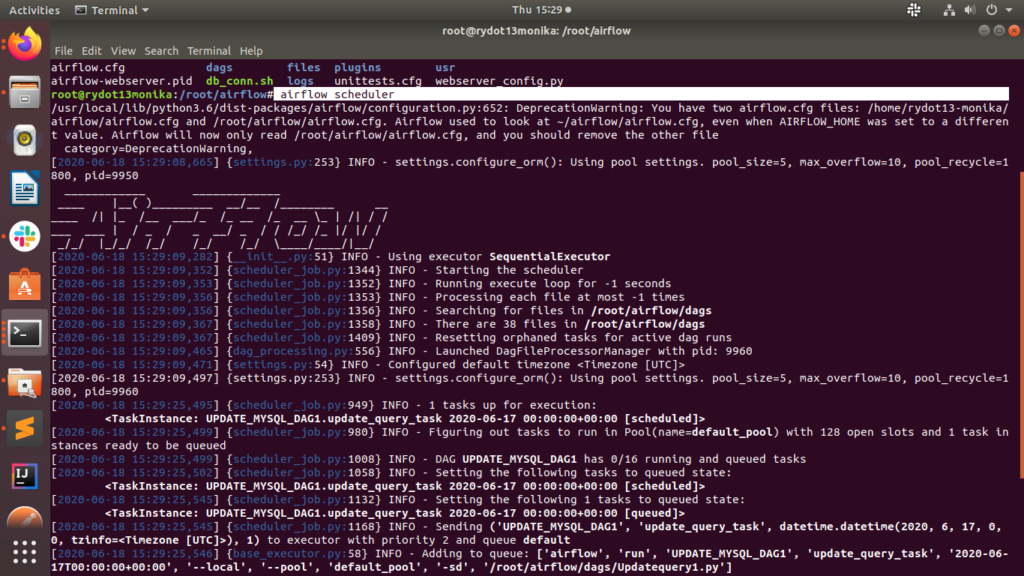

This post is part of the Data Engineering Series. In this post, I am going to discuss Apache Airflow, a workflow management system developed by Airbnb. Earlier I had discussed writing basic ETL pipelines in Bonobo. Bonobo is cool for writing ETL pipelines, but the world is not all about writing ETL pipelines to automate things. There are other use cases in which you have to perform tasks in a certain order, either once or periodically, for example:

- transferring data from one place to another;
- periodically fetching data from websites and updating the database for your awesome price comparison system.

When I run `airflow scheduler` on my local machine, it gives the following SQLAlchemy error:

> `File "/home/ubuntu/anaconda3/lib/python3.7/site-packages/sqlalchemy/engine/default.py", line 590, in do_execute`
>
> `(sqlite3.OperationalError) no such column: task_instance.pool_slots`
>
> `[SQL: SELECT task_instance.try_number AS task_instance_try_number, task_instance.task_id AS task_instance_task_id, task_instance.dag_id AS task_instance_dag_id, task_instance.execution_date AS task_instance_execution_date, task_instance.start_date AS task_instance_start_date, task_instance.end_date AS task_instance_end_date, task_instance.duration AS task_instance_duration, task_instance.state AS task_instance_state, task_instance.max_tries AS task_instance_max_tries, task_instance.hostname AS task_instance_hostname, task_instance.unixname AS task_instance_unixname, task_instance.job_id AS task_instance_job_id, task_instance.pool AS task_instance_pool, task_instance.pool_slots AS task_instance_pool_slots, task_instance.queue AS task_instance_queue, task_instance.priority_weight AS task_instance_priority_weight, task_instance.operator AS task_instance_operator, task_instance.queued_dttm AS task_instance_queued_dttm, task_instance.pid AS task_instance_pid, task_instance.executor_config AS task_instance_executor_config`
>
> `FROM task_instance JOIN dag_run ON task_instance.dag_id = dag_run.dag_id AND task_instance.execution_date = dag_run.execution_date`
>
> `WHERE dag_run.state = ? AND dag_run.run_id NOT LIKE ? AND task_instance.`
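The error says the `task_instance` table in the SQLite metadata database has no `pool_slots` column, which typically means the database schema is older than the installed Airflow code expects. The usual remedy is Airflow's schema-migration command for your version (for example `airflow upgradedb` on 1.10.x or `airflow db upgrade` on 2.x), but that is a general suggestion, not a fix confirmed in this thread. The sketch below only diagnoses the mismatch using Python's built-in `sqlite3` module; it assumes the default metadata DB location `$AIRFLOW_HOME/airflow.db` (with `AIRFLOW_HOME` defaulting to `~/airflow`), which your configuration may override.

```python
import os
import sqlite3


def table_columns(conn, table):
    """Return the column names of `table` via SQLite's PRAGMA table_info."""
    # PRAGMA cannot take bound parameters, so `table` must be a trusted, hardcoded name.
    # Each row is (cid, name, type, notnull, dflt_value, pk); index 1 is the column name.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


def missing_columns(conn, table, expected):
    """Return the expected column names that are absent from `table`."""
    present = set(table_columns(conn, table))
    return [col for col in expected if col not in present]


if __name__ == "__main__":
    # Assumption: default SQLite location; adjust if sql_alchemy_conn points elsewhere.
    home = os.environ.get("AIRFLOW_HOME", os.path.expanduser("~/airflow"))
    db_path = os.path.join(home, "airflow.db")
    if os.path.exists(db_path):
        # Open read-only so the check never modifies the metadata database.
        conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
        try:
            print(missing_columns(conn, "task_instance", ["pool_slots"]))
        finally:
            conn.close()
```

An output of `['pool_slots']` would confirm that the schema predates the code; an empty list points to a different cause, such as the scheduler reading a different database than the one checked here.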
